-

-

Notifications

You must be signed in to change notification settings - Fork 3.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

kmeans anchors #754

kmeans anchors #754

Conversation

|

@wang-xinyu thanks for your interest in our work and for submitting this PR! We already have a kmeans function that directly generates the anchor string needed to copy and paste into cfg files, however we have not had success in applying these anchors to produce better mAPs on COCO. An example utilization of the function is here. You supply the range of your image sizes (i.e. for multiscale training), your anchor count, and your data file path. Lines 742 to 744 in 2328823

|

|

@wang-xinyu hmm interesting, I looked at the lars repo, it seems their 'average IoU' metric must be the average of the best IoU's among all the 9 anchors. In this repo we display that metric as a 'best' average IoU on the final line. If I run kmeans_anchors() on coco/train2017.txt for example under a 320-640 img-size multiscale assumption I get 0.57 best-IoU: from utils.utils import *

_ = kmean_anchors(path='../coco/train2017.txt', n=9, img_size=(320, 640))

Caching labels (117266 found, 1021 missing, 0 empty, 0 duplicate, for 118287 images): 100%|██████████| 118287/118287 [00:52<00:00, 2246.04it/s]

Running kmeans on 849942 points...

0.10 iou_thr: 0.982 best possible recall, 4.3 anchors > thr

0.20 iou_thr: 0.953 best possible recall, 3.0 anchors > thr

0.30 iou_thr: 0.910 best possible recall, 2.1 anchors > thr

kmeans anchors (n=9, img_size=(320, 640), IoU=0.01/0.18/0.57-min/mean/best): 15,15, 28,47, 72,42, 57,106, 149,93, 111,201, 291,167, 205,340, 454,329For a fixed img-size of 512 I get the same mean value of 0.57 best-IoU: _ = kmean_anchors(path='../coco/train2017.txt', n=9, img_size=(512, 512))

Caching labels (117266 found, 1021 missing, 0 empty, 0 duplicate, for 118287 images): 100%|██████████| 118287/118287 [00:54<00:00, 2179.55it/s]

Running kmeans on 849942 points...

0.10 iou_thr: 0.984 best possible recall, 4.4 anchors > thr

0.20 iou_thr: 0.957 best possible recall, 3.1 anchors > thr

0.30 iou_thr: 0.915 best possible recall, 2.2 anchors > thr

kmeans anchors (n=9, img_size=(512, 512), IoU=0.01/0.18/0.57-min/mean/best): 16,17, 31,49, 78,46, 60,114, 152,99, 115,216, 286,165, 219,351, 447,301What values do you get when you run the lars anchors on the COCO data @wang-xinyu? |

|

@glenn-jocher Oh sorry I didn't notice there already has kmeans anchors in this repo. I didn't try COCO data, I run kmeans on my own data, for head detection, and use new anchors to train, and didn't make it to get better result either. But I think it reasonable to use custom anchors clustered from train data. Maybe the custom anchors cannot make a big impact on mAP, as the features are trained to adapt the final bbox. And I think your measurement of best-IoU is more reasonable than lars's Average-IoU. But the key is kmeans, not how to measure IoU, so your implementation and lars are similar, no big difference. And no need to merge my PR... Thanks for your patience. |

|

@glenn-jocher |

|

@xyl-507 I would highly suggest you use our YOLOv5 repo with auto anchor. It automatically checks your anchors against your data and evolves new anchors for you if you need them. This is the default behavior in v5 for all trainings. |

|

@glenn-jocher |

|

@xyl-507 overfitting is a phenomenon that larger models are more prone to. YOLOv3-tiny is honestly a very poor model, with quite low capabilities, so it would be very hard to overfit 40,000 images with this model. Finetuning (aka starting from pretrained weights) is a good method for training smaller datasets, and in particular for achieving results quickly. Larger datasets benefit from it to a lesser degree. I would repeat your above experiments with YOLOv5s in place of YOLOv3-tiny (it trains to more than 2x the mAP on COCO, and is the same size). Additionally, YOLOv5 benefits from numerous bug fixes and feature additions absent from YOLOv3. |

|

@glenn-jocher your reply is very helpful for me. Excuse me for asking so many questions. |

|

v5 buddy. There’s zero sense pondering issues that may already be resolved there. |

|

@glenn-jocher OK,I move to v5 repo! Thanks! |

|

@xyl-507 yes, definitely move to https://github.com/ultralytics/yolov5. I can't emphasize enough the enormous improvement we've made just in the last few months from this repo to our YOLOv5 repo. We have over 30 contributors there as well providing PRs and helping align it with best practices. Our intention to make YOLOv5 the simplest, most robust, and most accurate detection model in the world. |

|

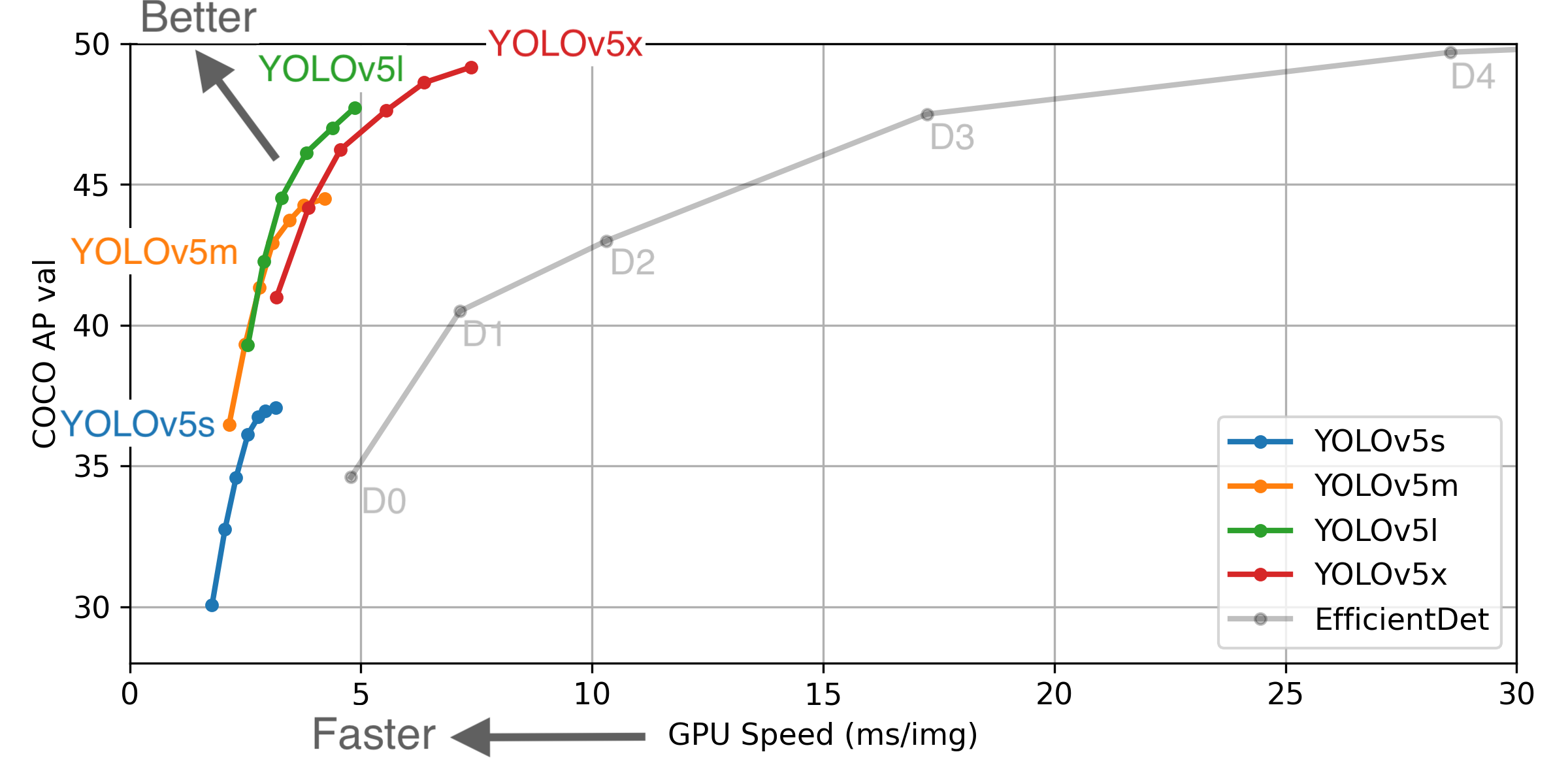

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

Hi,

I adapt lars76/kmeans-anchor-boxes to this project, loading the data with yolo label format, and run k-means clustering on the dimensions of bounding boxes to get good priors.

I think it would be helpful to train on custom datasets, because the default anchors from paper are clustered from VOC and COCO.

🛠️ PR Summary

Made with ❤️ by Ultralytics Actions

🌟 Summary

Introducing a new script for generating custom anchor boxes using k-means clustering.

📊 Key Changes

gen_anchors.pyscript:kmeans.pywithin theutilsfolder:🎯 Purpose & Impact