-

-

Notifications

You must be signed in to change notification settings - Fork 2.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add cooperative task yielding #2160

Conversation

|

Oh, and for those of you who enjoy reading things, here is Go's approach (which is very different, but also relies much more on a runtime): https://github.com/golang/go/blob/master/src/runtime/preempt.go |

|

One could certainly write a proc macro attribute to insert checks in all loops. |

|

@llogiq well that escalated quickly... 😆 |

tokio/src/task/preemption.rs

Outdated

| /// Note that as more preemption points are added in the ecosystem, this value will probably also | ||

| /// have to be raised. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I wonder if, in the future, there might be some way to determine this adaptively...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Great start. I added some thoughts inline.

I would suggest starting without any public API. Instead, limit this to Tokio primitives.

One potential issue is if Tokio primitives are updated to hook into the operation counter, the current implementation would bind them to the Tokio runtime as other runtimes wont reset the budget.

We probably will want to only track the budget if we know that the budget is reset between polls.

|

Also, we discussed this some in Discord, but IMO the budget should only be decremented when using leaf resources and not at branches (like iteration). The reasoning is that, unlike runtimes such as erlang and golang, we do not have a method by which we can weigh the cost of intermediate points in the task. Also, we are not trying to solve cpu bound logic on an asynchronous task, only the case where an asynchronous resource is always ready. |

|

Finally, I would be interested in hooking this into tokio resources then testing something like tokio-minihttp w/ |

|

This is admittedly a bikeshed, but I'm also not really convinced about the usage of the term "preemption" here. As I see it, explicitly marking potential yield points is not preemption; to me, the term implies that the preempted code is unaware that the scheduler will switch execution to a different unit of work, and that preemption may occur anywhere, rather than at designated yield points. This is, admittedly, splitting hairs, but there are some implications I think of when I see that terminology... |

|

@hawkw we can punt all naming bikeshedding by avoiding public APIs for now 😆 |

|

I think "preemption" is actually the right name in a strict technical sense because we are making a thing exit before it was normally ready to exit. But agree that that is bikeshedding, and can be delayed. I've pushed more ridiculous names to indicate that this is private and not final. |

|

@carllerche I'm still not sure whether only tracking leaf futures or also intermediate things is the way to go. While I agree that we (probably) aren't going to preempt CPU-bound tasks this way, I don't see a reason why we explicitly don't want to? It might help alleviate some of the issues with blocking tasks that people currently have to solve with I'll play around with adding annotations to resources. |

|

One awkward thing about annotating leaves and intermediates is that it's usually hard for them to yield after doing work. Take |

|

@jonhoo mostly, at this point, we cannot evaluate the cost of each "branch" future, nor can we guarantee that all branch point swill inject code to update the counter. If one task uses branching logic that updates the counter and another doesn't... that will significantly bias the amount of scheduler time one task gets vs. another... I would err on the side of "keeping it simpler". Add the counting logic in fewer places (the leaves) and see how things behave. |

99% of the time, "it doesn't matter" is my guess. One option would be to dec first, and if Pending is hit, inc again, but my guess is that if you hit pending in a branch... even if you decremented the counter once, it probably won't significantly impact behavior. |

fad2aa9 to

bf7ede7

Compare

|

I mentioned this in a sub-thread, but I wonder if it makes sense to move the little module that does this tracking into its own crate. That way, any crate that provides an executor or a leaf future can depend on it and call its methods, and we would avoid the issues around using non-cooperating leaf futures or executors that do not reset the budget. Thoughts? |

|

@jonhoo well, we are trying to reduce dependencies :) Once we figure out a way to expose it publicly, it can be a feature flag probably so you can limit the |

|

Hmm, so, definitely a performance regression... With $ (master) wrk -t10 -c500 -d10 http://0.0.0.0:8080/plaintext

Running 10s test @ http://0.0.0.0:8080/plaintext

10 threads and 500 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 5.24ms 502.08us 18.36ms 93.22%

Req/Sec 9.59k 740.74 29.17k 92.30%

954162 requests in 10.08s, 117.38MB read

Requests/sec: 94698.33

Transfer/sec: 11.65MB

$ (preempt) wrk -t10 -c500 -d10 http://0.0.0.0:8080/plaintext

Running 10s test @ http://0.0.0.0:8080/plaintext

10 threads and 500 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 5.27ms 528.39us 10.62ms 84.95%

Req/Sec 9.52k 0.85k 30.26k 88.90%

947569 requests in 10.07s, 116.57MB read

Requests/sec: 94125.25

Transfer/sec: 11.58MBWith $ (master) wrk -t10 -c500 -d10 http://0.0.0.0:8080/plaintext

Running 10s test @ http://0.0.0.0:8080/plaintext

10 threads and 500 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 573.78us 410.32us 23.54ms 93.47%

Req/Sec 78.42k 15.86k 112.77k 52.65%

7808327 requests in 10.10s, 0.94GB read

Requests/sec: 773112.94

Transfer/sec: 95.11MB

$ (preeempt) wrk -t10 -c500 -d10 http://0.0.0.0:8080/plaintext

Running 10s test @ http://0.0.0.0:8080/plaintext

10 threads and 500 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 648.79us 0.89ms 38.38ms 99.35%

Req/Sec 73.99k 16.40k 104.67k 49.65%

7367111 requests in 10.10s, 0.89GB read

Requests/sec: 729472.40

Transfer/sec: 89.74MBOn this machine: $ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 48 bits virtual

CPU(s): 40

On-line CPU(s) list: 0-39

Thread(s) per core: 2

Core(s) per socket: 10

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 63

Model name: Intel(R) Xeon(R) CPU E5-2660 v3 @ 2.60GHz

Stepping: 2

CPU MHz: 2597.092

CPU max MHz: 2600.0000

CPU min MHz: 1200.0000

BogoMIPS: 5196.60

Virtualization: VT-x

L1d cache: 640 KiB

L1i cache: 640 KiB

L2 cache: 5 MiB

L3 cache: 50 MiB

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39 |

|

Hmm, the test failure looks pretty suspicious. Somehow this assert occasionally fails (the test is racy)? tokio/tokio/src/sync/semaphore_ll.rs Line 640 in b33ce77

@carllerche Any ideas how the change from this PR might cause that? The only relevant change (I think) is the call to poll_cooperate just above, which means that it'll sometimes return Poll::Pending in poll_acquire where previously it would not?tokio/tokio/src/sync/semaphore_ll.rs Lines 636 to 637 in b33ce77

The relevant panic stack frames are |

|

9d10726 adds the ability to sub-budget. That is, within a scope you can artificially constrain the budget. This is useful if you want to not allow a particular sub-future (like |

|

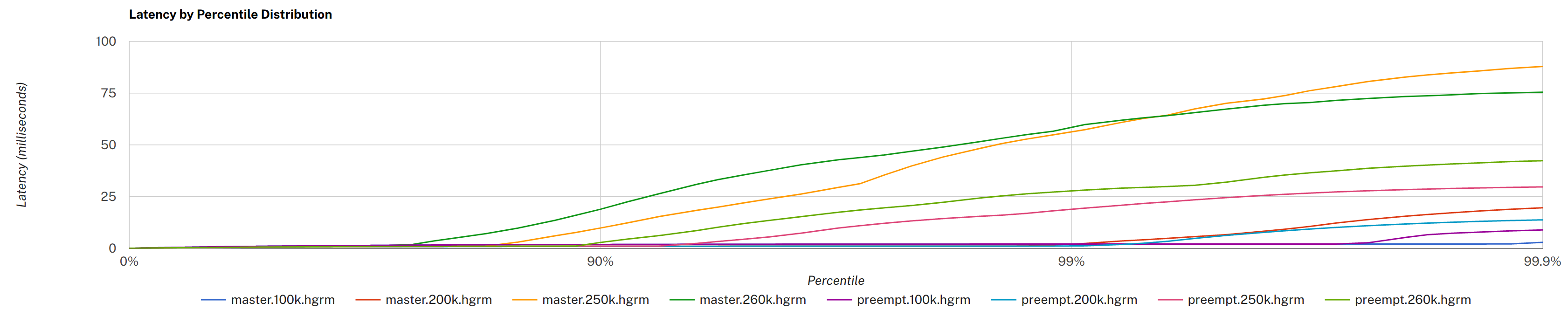

Latency breakdown with

I don't currently have a good way of measuring what the effect is on "maximum throughput", so suggestions are welcome. Note that we do not expect this PR to improve performance — it is instead meant to help guard against degenerate patterns in futures. In particular, since we probably don't have any starvation going on, the PR should only be overhead for this particular benchmark. We know it'll come at a cost, we're just trying to determine what that cost is. |

|

Tests pass 🎉 I think there are currently two blockers. First, it would be good to have a way to measure the overhead of this approach and determining whether it is acceptable. The numbers from benchmarks above give us some sense, but a more wholistic test would be good. Maybe @seanmonstar has some good ones on Second, this changes the behavior of futures outside of tokio that depend on tokio resources in ways that may lead to weird results. In particular, with this change, a future may now enter a state where every subsequent call to |

IMHO, panicking in these cases seems like a bad call — I think it's much better to disable the budgeting for that task. Eventually, we would probably want to log that we're disabling the preemption budget for a task? |

|

The next question becomes how to even track this. I suppose we could keep two counters, one for "budget" and one for "how far over budget" that is only accessed when a yield point is encountered after the budget has been exceeded. What would a reasonable value for when we disable budgeting be? |

|

It seems to me that ✨eventually✨, both "budget" and "how far over budget we go before disabling budgeting" ought to be knobs that are tuneable at the runtime level, though ideally we should definitely provide defaults that nobody reasonable would need to change. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks great to me. I had some questions on the placement of yield points in tokio, and a few minor style nits.

|

Test failure should be fixed by #2236. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No blocking comments to merge this. Before making the API public, I would want to bikeshed naming some, but this is fine to merge as soon as it is rebased.

448fa37 to

d74b586

Compare

|

I've re-check perf with the minihttp example, and same trend as in #2160 (comment) |

A single call to `poll` on a top-level task may potentially do a lot of work before it returns `Poll::Pending`. If a task runs for a long period of time without yielding back to the executor, it can starve other tasks waiting on that executor to execute them, or drive underlying resources. See for example rust-lang/futures-rs#2047, rust-lang/futures-rs#1957, and rust-lang/futures-rs#869. Since Rust does not have a runtime, it is difficult to forcibly preempt a long-running task. Consider a future like this one: ```rust use tokio::stream::StreamExt; async fn drop_all<I: Stream>(input: I) { while let Some(_) = input.next().await {} } ``` It may look harmless, but consider what happens under heavy load if the input stream is _always_ ready. If we spawn `drop_all`, the task will never yield, and will starve other tasks and resources on the same executor. This patch adds a `coop` module that provides an opt-in mechanism for futures to cooperate with the executor to avoid starvation. This alleviates the problem above: ``` use tokio::stream::StreamExt; async fn drop_all<I: Stream>(input: I) { while let Some(_) = input.next().await { tokio::coop::proceed().await; } } ``` The call to [`proceed`] will coordinate with the executor to make sure that every so often control is yielded back to the executor so it can run other tasks. The implementation uses a thread-local counter that simply counts how many "cooperation points" we have passed since the task was first polled. Once the "budget" has been spent, any subsequent points will return `Poll::Pending`, eventually making the top-level task yield. When it finally does yield, the executor resets the budget before running the next task. The budget per task poll is currently hard-coded to 128. Eventually, we may want to make it dynamic as more cooperation points are added. The number 128 was chosen more or less arbitrarily to balance the cost of yielding unnecessarily against the time an executor may be "held up". At the moment, all the tokio leaf futures ("resources") call into coop, but external futures have no way of doing so. We probably want to continue limiting coop points to leaf futures in the future, but may want to also enable third-party leaf futures to cooperate to benefit the ecosystem as a whole. This is reflected in the methods marked as `pub` in `mod coop` (even though the module is only `pub(crate)`). We will likely also eventually want to expose `coop::limit`, which enables sub-executors and manual `impl Future` blocks to avoid one sub-task spending all of their poll budget. Benchmarks (see #2160) suggest that the overhead of `coop` is marginal.

The work-stealing scheduler includes an optimization where each worker includes a single slot to store the **last** scheduled task. Tasks in scheduled task is executed next. This speeds up and reduces latency with message passing patterns. Previously, this optimization was susceptible to starving other tasks in certain cases. If two tasks ping-ping between each other without ever yielding, the worker would never execute other tasks. An early PR (#2160) introduced a form of pre-emption. Each task is allocated a per-poll operation budget. Tokio resources will return ready until the budget is depleted, at which point, Tokio resources will always return `Pending`. This patch leverages the operation budget to limit the LIFO scheduler optimization. When executing tasks from the LIFO slot, the budget is **not** reset. Once the budget goes to zero, the task in the LIFO slot is pushed to the back of the queue.

The work-stealing scheduler includes an optimization where each worker includes a single slot to store the **last** scheduled task. Tasks in scheduler's LIFO slot are executed next. This speeds up and reduces latency with message passing patterns. Previously, this optimization was susceptible to starving other tasks in certain cases. If two tasks ping-ping between each other without ever yielding, the worker would never execute other tasks. An early PR (#2160) introduced a form of pre-emption. Each task is allocated a per-poll operation budget. Tokio resources will return ready until the budget is depleted, at which point, Tokio resources will always return `Pending`. This patch leverages the operation budget to limit the LIFO scheduler optimization. When executing tasks from the LIFO slot, the budget is **not** reset. Once the budget goes to zero, the task in the LIFO slot is pushed to the back of the queue.

Motivation

A single call to

pollon a top-level task may potentially do a lot of work before it returnsPoll::Pending. If a task runs for a long period of time without yielding back to the executor, it can starve other tasks waiting on that executor to execute them, or drive underlying resources. See for example rust-lang/futures-rs#2047, rust-lang/futures-rs#1957, and rust-lang/futures-rs#869. Since Rust does not have a runtime, it is difficult to forcibly preempt a long-running task.Consider a future like this one:

It may look harmless, but consider what happens under heavy load if the input stream is always ready. If we spawn

drop_all, the task will never yield, and will starve other tasks and resources on the same executor.Solution

The

preemptionmodule provides an opt-in mechanism for futures to collaborate with the executor to avoid starvation. With opt-in preemption, the problem above is alleviated:The call to [

preempt_check!] will coordinate with the executor to make sure that every so often control is yielded back to the executor so it can run other tasks.Implementation

The implementation uses a thread-local counter that simply counts how many "preemption points" we have passed since the task was first polled. Once the "budget" has been spent, any subsequent preemption points will return

Poll::Pending, eventually making the top-level task yield. When it finally does yield, the executor resets the budget before running the next task.