Revisiting Skeleton-based Action Recognition

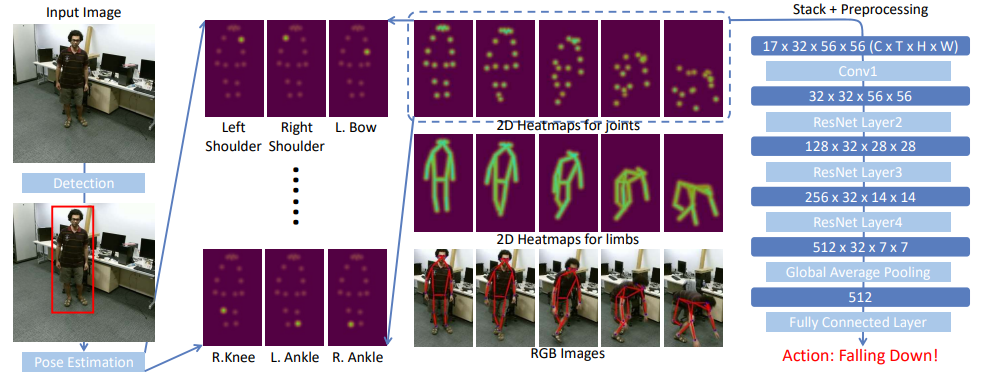

The human skeleton, as a compact representation of human action, has received increasing attention in recent years. Many skeleton-based action recognition methods adopt graph convolutional networks (GCN) to extract features on top of human skeletons. Despite the positive results shown in previous works, GCN-based methods are subject to limitations in robustness, interoperability, and scalability. In this work, we propose PoseC3D, a new approach to skeleton-based action recognition, which relies on a 3D heatmap stack instead of a graph sequence as the base representation of human skeletons. Compared to GCN-based methods, PoseC3D is more effective in learning spatiotemporal features, more robust against pose estimation noise, and generalizes better in cross-dataset settings. Also, PoseC3D can handle multiple-person scenarios without additional computation cost, and its features can be easily integrated with other modalities at early fusion stages, which offers a large design space for further boosting performance. On four challenging datasets, PoseC3D consistently obtains superior performance, both when used alone on skeletons and when combined with the RGB modality.
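The central idea of the abstract is to represent a skeleton sequence as a stack of 2D heatmaps instead of a graph. The sketch below illustrates that idea in pure Python under our own assumptions; it is not MMAction2's implementation.

- Each joint becomes a Gaussian scaled by its confidence score (a "keypoint" pseudo heatmap).
- Each limb becomes a Gaussian of the distance to the segment joining its two joints, scaled by the weaker joint score (a "limb" pseudo heatmap).
- Several people are merged into the same channels by element-wise max.
- Stacking the maps over T frames gives a K × T × H × W volume.

The names `keypoint_map`, `limb_map`, `merge_people` and `keypoint_volume`, and the default `sigma=0.6`, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# One joint in one frame: (x, y, score); x indexes columns, y indexes rows.
Joint = Tuple[float, float, float]
Grid = List[List[float]]


def keypoint_map(joint: Joint, h: int, w: int, sigma: float = 0.6) -> Grid:
    """Gaussian centred on the joint, scaled by its confidence score."""
    x, y, c = joint
    return [[c * math.exp(-((i - x) ** 2 + (j - y) ** 2) / (2 * sigma ** 2))
             for i in range(w)] for j in range(h)]


def _segment_distance(px: float, py: float, a: Joint, b: Joint) -> float:
    """Euclidean distance from (px, py) to the segment joining joints a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    len2 = dx * dx + dy * dy
    t = 0.0 if len2 == 0 else ((px - a[0]) * dx + (py - a[1]) * dy) / len2
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (a[0] + t * dx), py - (a[1] + t * dy))


def limb_map(a: Joint, b: Joint, h: int, w: int, sigma: float = 0.6) -> Grid:
    """Gaussian of the distance to limb a-b, scaled by the weaker joint score."""
    c = min(a[2], b[2])
    return [[c * math.exp(-_segment_distance(i, j, a, b) ** 2 / (2 * sigma ** 2))
             for i in range(w)] for j in range(h)]


def merge_people(maps: Sequence[Grid]) -> Grid:
    """Combine several people's maps for the same joint by element-wise max."""
    return [[max(vals) for vals in zip(*rows)] for rows in zip(*maps)]


def keypoint_volume(people_frames: Sequence[Sequence[Sequence[Joint]]],
                    h: int, w: int, sigma: float = 0.6) -> List[List[Grid]]:
    """Build a K x T x H x W stack from joints indexed as people_frames[m][t][k]."""
    num_frames = len(people_frames[0])
    num_joints = len(people_frames[0][0])
    return [[merge_people([keypoint_map(person[t][k], h, w, sigma)
                           for person in people_frames])
             for t in range(num_frames)]
            for k in range(num_joints)]


# Two people, two frames, two joints each (toy coordinates on an 8 x 8 grid).
people = [
    [[(2.0, 1.0, 0.9), (5.0, 4.0, 1.0)], [(3.0, 1.0, 0.8), (5.0, 5.0, 1.0)]],
    [[(6.0, 6.0, 0.7), (1.0, 6.0, 0.6)], [(6.0, 7.0, 0.7), (1.0, 7.0, 0.6)]],
]
vol = keypoint_volume(people, h=8, w=8)
print(len(vol), len(vol[0]), len(vol[0][0]), len(vol[0][0][0]))  # 2 2 8 8
```

Every person is drawn into the same K channels, so adding people leaves the volume's size unchanged. This is one way to read the abstract's claim that multi-person scenes cost no extra computation.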

| frame sampling strategy | pseudo heatmap | gpus | backbone | Mean Top-1 | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 48 | keypoint | 8 | SlowOnly-R50 | 93.5 | 10 clips | 20.6G | 2.0M | config | ckpt | log |
| uniform 48 | limb | 8 | SlowOnly-R50 | 93.6 | 10 clips | 20.6G | 2.0M | config | ckpt | log |
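We read the "uniform 48" sampling strategy as follows: split the whole video into 48 equal-length segments and take one frame from each. We read the "10 clips" testing protocol as drawing 10 such samples and averaging their class scores. The sketch below shows that reading. The interpretation, the function names and the short-video fallback are our assumptions, and MMAction2's own sampler may differ in details such as how test-time offsets are chosen.

```python
import random
from typing import List, Optional, Sequence


def uniform_sample(total_frames: int, clip_len: int = 48,
                   rng: Optional[random.Random] = None) -> List[int]:
    """Pick one frame index from each of clip_len equal-length segments."""
    if rng is None:
        rng = random.Random()
    if total_frames < clip_len:
        # Hypothetical fallback for short videos: spread indices evenly, repeating frames.
        return [i * total_frames // clip_len for i in range(clip_len)]
    bounds = [i * total_frames // clip_len for i in range(clip_len + 1)]
    # Each segment [bounds[i], bounds[i + 1]) is non-empty when total_frames >= clip_len.
    return [rng.randrange(bounds[i], bounds[i + 1]) for i in range(clip_len)]


def multi_clip_indices(total_frames: int, clip_len: int = 48,
                       num_clips: int = 10, seed: int = 0) -> List[List[int]]:
    """Draw num_clips independent uniform samples with a fixed seed for testing."""
    rng = random.Random(seed)
    return [uniform_sample(total_frames, clip_len, rng) for _ in range(num_clips)]


def average_clip_scores(clip_scores: Sequence[Sequence[float]]) -> List[float]:
    """Average per-class scores over clips to get one video-level prediction."""
    return [sum(col) / len(clip_scores) for col in zip(*clip_scores)]


clips = multi_clip_indices(total_frames=300)
print(len(clips), len(clips[0]))  # 10 48
print(average_clip_scores([[0.2, 0.8], [0.4, 0.6]]))
```

Sampling over the whole video, rather than a fixed-length window, means a 48-frame clip always covers the full action regardless of video length.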

| frame sampling strategy | pseudo heatmap | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 48 | keypoint | 8 | SlowOnly-R50 | 93.6 | 10 clips | 20.6G | 2.0M | config | ckpt | log |
| uniform 48 | limb | 8 | SlowOnly-R50 | 93.5 | 10 clips | 20.6G | 2.0M | config | ckpt | log |
| | Fusion | | | 94.0 | | | | | | |
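The Fusion row reports 94.0 for combining the keypoint and limb models, but the README does not say how the two are combined. This sketch assumes a common late-fusion scheme: sum each model's per-class scores, optionally weighted, and then take the argmax. It also contrasts overall top-1 accuracy with top-1 accuracy averaged over classes, which is our reading of the "Mean Top-1" column in the first table. The function names, the equal weights and the toy scores are hypothetical, and none of the numbers come from the models above.

```python
from typing import Dict, List, Sequence, Tuple

Scores = Sequence[Sequence[float]]  # N samples x C classes


def fuse_scores(streams: Sequence[Scores], weights: Sequence[float]) -> List[List[float]]:
    """Weighted sum of class scores from several streams, sample by sample."""
    return [[sum(w * stream[n][c] for w, stream in zip(weights, streams))
             for c in range(len(streams[0][n]))]
            for n in range(len(streams[0]))]


def _argmax(row: Sequence[float]) -> int:
    return max(range(len(row)), key=row.__getitem__)


def top1_accuracy(scores: Scores, labels: Sequence[int]) -> float:
    """Fraction of samples whose highest-scoring class is the label."""
    hits = sum(_argmax(s) == y for s, y in zip(scores, labels))
    return hits / len(labels)


def mean_class_accuracy(scores: Scores, labels: Sequence[int]) -> float:
    """Top-1 accuracy computed separately for each class, then averaged."""
    per_class: Dict[int, Tuple[int, int]] = {}
    for s, y in zip(scores, labels):
        hit, total = per_class.get(y, (0, 0))
        per_class[y] = (hit + (_argmax(s) == y), total + 1)
    return sum(h / t for h, t in per_class.values()) / len(per_class)


keypoint = [[0.6, 0.4], [0.45, 0.55], [0.3, 0.7]]  # toy keypoint-model scores
limb = [[0.7, 0.3], [0.6, 0.4], [0.2, 0.8]]        # toy limb-model scores
labels = [0, 0, 1]
fused = fuse_scores([keypoint, limb], weights=[1.0, 1.0])
print(top1_accuracy(keypoint, labels))        # 0.6666666666666666
print(mean_class_accuracy(keypoint, labels))  # 0.75
print(top1_accuracy(fused, labels))           # 1.0
```

In this toy case the limb scores correct the keypoint model's mistake on the second sample. The two metrics also diverge when classes are imbalanced, which is why a per-class mean is reported separately.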

| frame sampling strategy | pseudo heatmap | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 48 | keypoint | 8 | SlowOnly-R50 | 86.8 | 10 clips | 14.6G | 3.1M | config | ckpt | log |

| frame sampling strategy | pseudo heatmap | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 48 | keypoint | 8 | SlowOnly-R50 | 69.6 | 10 clips | 14.6G | 3.0M | config | ckpt | log |

| frame sampling strategy | pseudo heatmap | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 48 | keypoint | 8 | SlowOnly-R50 | 47.4 | 10 clips | 19.1G | 3.2M | config | ckpt | log |

You can follow the guide in Preparing Skeleton Dataset to obtain skeleton annotations used in the above configs.
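For orientation, here is our understanding of the annotation file the guide produces. It is a pickle holding a `split` mapping and an `annotations` list. Each annotation carries fields such as:

- `frame_dir`, `total_frames`, `img_shape`, `original_shape` and `label`
- `keypoint`: M people × T frames × V joints × 2 coordinates
- `keypoint_score`: M × T × V confidences

Treat these field names and shapes as assumptions to verify against Preparing Skeleton Dataset. The real files store NumPy arrays, which nested lists stand in for here. The helper `make_annotation`, the split names and the sample values are hypothetical.

```python
import pickle
from typing import Dict, List, Tuple

Coords = List[List[List[List[float]]]]  # M x T x V x 2 (x, y), assumed layout
Scores = List[List[List[float]]]        # M x T x V, assumed layout


def make_annotation(frame_dir: str, label: int, keypoint: Coords,
                    keypoint_score: Scores,
                    img_shape: Tuple[int, int] = (1080, 1920)) -> Dict:
    """Build one annotation dict (hypothetical helper; field names assumed)."""
    if len(keypoint) != len(keypoint_score):
        raise ValueError("keypoint and keypoint_score disagree on the number of people")
    total_frames = len(keypoint[0]) if keypoint else 0
    return dict(
        frame_dir=frame_dir,            # unique sample identifier
        total_frames=total_frames,      # T
        img_shape=img_shape,            # assumed (height, width)
        original_shape=img_shape,
        label=label,                    # integer class index
        keypoint=keypoint,              # M x T x V x 2 coordinates
        keypoint_score=keypoint_score,  # M x T x V confidences
    )


# One person, two frames, three joints (toy values).
kp = [[[[10.0, 20.0], [12.0, 25.0], [14.0, 30.0]],
       [[11.0, 20.0], [13.0, 25.0], [15.0, 30.0]]]]
score = [[[0.9, 0.8, 0.7], [0.9, 0.85, 0.6]]]
ann = make_annotation("sample_0001", label=0, keypoint=kp, keypoint_score=score)

data = {"split": {"train": [ann["frame_dir"]], "val": []}, "annotations": [ann]}
blob = pickle.dumps(data)  # these bytes would be written to a .pkl file
restored = pickle.loads(blob)
print(restored["annotations"][0]["total_frames"])  # 2
```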

You can use the following command to train a model.

```shell
python tools/train.py ${CONFIG_FILE} [optional arguments]
```

Example: train the PoseC3D model on the FineGYM dataset with deterministic settings.

```shell
python tools/train.py configs/skeleton/posec3d/slowonly_r50_8xb16-u48-240e_gym-keypoint.py \
    --seed=0 --deterministic
```

For training with your custom dataset, you can refer to Custom Dataset Training.

For more details, you can refer to the Training part in the Training and Test Tutorial.

You can use the following command to test a model.

```shell
python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]
```

Example: test the PoseC3D model on the FineGYM dataset.

```shell
python tools/test.py configs/skeleton/posec3d/slowonly_r50_8xb16-u48-240e_gym-keypoint.py \
    checkpoints/SOME_CHECKPOINT.pth
```

For more details, you can refer to the Test part in the Training and Test Tutorial.

```BibTeX
@misc{duan2021revisiting,
      title={Revisiting Skeleton-based Action Recognition},
      author={Haodong Duan and Yue Zhao and Kai Chen and Dian Shao and Dahua Lin and Bo Dai},
      year={2021},
      eprint={2104.13586},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```